What’s different as we approach the end of 2024?

It seems like everyone is talking about DORA metrics. Whether it’s engineering teams proudly sharing how they’ve improved, or leaders stressing over whether they’re hitting the right targets, DORA metrics have become a hot topic in DevOps conversations.

This is definitely a step in the right direction.

But, as with anything that gains rapid traction, there’s often confusion lurking beneath the surface. Engineering teams are grappling with questions that don’t always have straightforward answers. How accurate are these metrics? Are we measuring the right things? Can DORA really work for us?

The reality is that misconceptions or myths around DORA metrics can quickly lead you down the wrong path.

Fortunately, we’ve got you covered. In this blog, we’re not just going to debunk a few common myths—we’ll also dig into the subtleties and gray areas that often get overlooked.

If you’re new to DORA metrics, consider starting with our full guide to DORA Metrics to get familiar with the basics before getting any deeper into this conversation.

But for now, let’s peel back the layers and look at six common misconceptions about DORA metrics, and at the subtle ways your engineering teams can avoid them before they affect your performance. You might find that the answers are not as black and white as they seem.

Most Common Myths about DORA Metrics

Myth #1: DORA Metrics Are Only for Large, Mature DevOps Teams

Many think that DORA metrics are best suited for large-scale organizations with fully developed DevOps practices. But this couldn’t be further from the truth. In fact, smaller engineering teams or those just starting their DevOps journey can reap significant benefits from DORA metrics.

Here’s where things get interesting: DORA metrics might actually help smaller engineering teams accelerate their growth in ways that larger teams cannot. Think of it this way: smaller engineering teams have fewer layers of bureaucracy, which means they can adapt faster. Larger, mature engineering teams may have robust processes, but their size and complexity often slow down change. Smaller engineering teams that implement DORA metrics early can quickly iterate, identify bottlenecks, and course-correct, using these metrics as scaffolding to build scalable, efficient DevOps practices.

It’s not about whether your engineering team is “ready” for DORA—it’s about how ready you are to make smarter decisions based on real data, no matter your size.

Next, let’s explore a myth that misinterprets the role of speed in DORA metrics.


Myth #2: DORA Metrics Are Solely About Speed

When DORA metrics get introduced, we’ve often seen engineering teams latch onto the more glamorous metrics: deployment frequency and lead time for changes.

Everyone wants to move faster, right? But here’s where the conversation gets deeper: speed without quality is like trying to win a race with a car that’s falling apart.

The truth is, DORA metrics are not just about moving fast—they’re about moving fast and steady. High-performing teams don’t just deploy quickly; they deploy with confidence, knowing that stability and reliability aren’t being sacrificed. Think of it like this: would you rather ship ten buggy releases in a week, or five rock-solid ones that don’t require constant rollbacks?

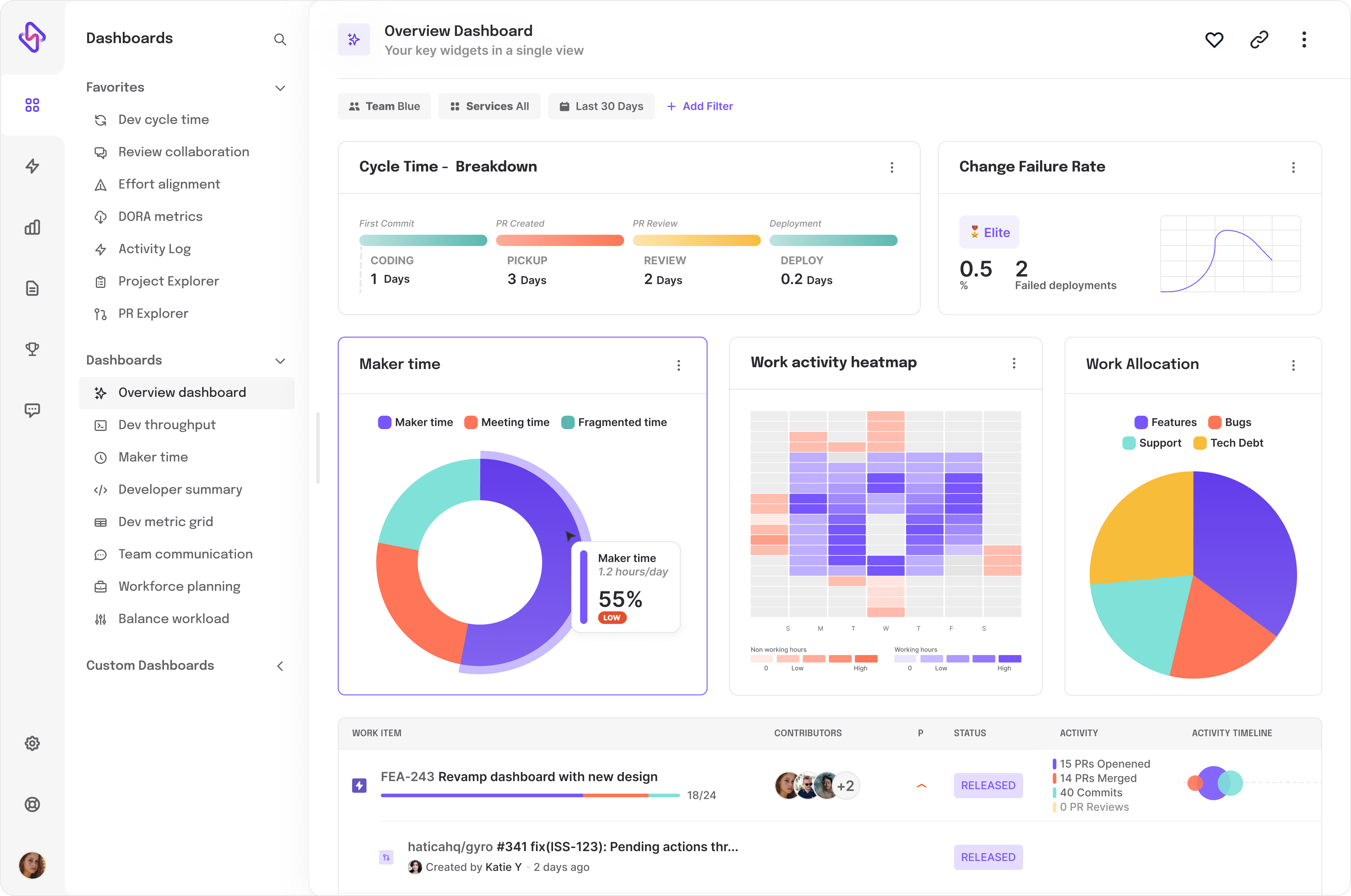

This nuance is crucial because it’s easy to celebrate deployment frequency without realizing your change failure rate is climbing. DORA metrics are designed to balance speed and quality. The trick isn’t to move fast for the sake of speed but to optimize the process so you can maintain velocity while keeping the product stable.

Myth #3: DORA Metrics Only Apply to Production Environments

It’s tempting to think DORA metrics are most useful for measuring performance in production. After all, that’s where the real impact happens, right? But the truth is that the performance of your production environment is largely determined by what happens earlier—during development and staging.

Here’s an insight that often surprises teams: your non-production environments can provide early signals of performance issues long before they hit production. If your deployment frequency is smooth in development but grinds to a halt in production, that’s a sign that your pipeline has hidden inefficiencies. Similarly, if changes are failing repeatedly in staging, it’s a precursor to trouble down the road.

The beauty of DORA metrics is that they give you a full view of your pipeline’s health. By measuring across all environments, you can catch problems early, refine your processes, and improve overall stability. Engineering teams that wait until production to start measuring are often playing catch-up, putting out fires that could have been prevented with better monitoring earlier in the process.
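As a rough illustration, here is a minimal Python sketch that applies the same two counts, deployments per day and the share of deployments that fail, to every environment at once. It assumes your CI/CD tooling can export one record per deployment with an environment name and a failure flag; the `Deployment` record, `per_environment_stats`, and the sample data are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Deployment:
    # Hypothetical export format: one record per deployment attempt.
    environment: str  # e.g. "dev", "staging", "production"
    failed: bool      # True if the change failed or had to be rolled back


def per_environment_stats(deployments, days):
    """Deployments per day and failure rate, grouped by environment."""
    counts = defaultdict(int)
    failures = defaultdict(int)
    for d in deployments:
        counts[d.environment] += 1
        if d.failed:
            failures[d.environment] += 1
    return {
        env: {
            "deploys_per_day": counts[env] / days,
            "failure_rate": failures[env] / counts[env],
        }
        for env in counts
    }


if __name__ == "__main__":
    # Made-up data: plenty of dev deploys, a failure-heavy staging stage,
    # and only a trickle of changes reaching production.
    sample = (
        [Deployment("dev", False)] * 40
        + [Deployment("staging", True)] * 6
        + [Deployment("staging", False)] * 6
        + [Deployment("production", False)] * 3
    )
    for env, stats in per_environment_stats(sample, days=14).items():
        print(env, stats)
```

In this made-up sample, staging’s high failure rate and production’s low deployment rate together point to staging as the bottleneck, a signal you would miss by looking at production numbers alone.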

Now that we know DORA metrics apply across environments, let’s consider why focusing on just one metric might give you a false sense of progress.

Myth #4: Focusing on One Metric Guarantees Success

Ever heard the phrase “what gets measured gets improved”? It’s true—but if you only focus on one metric, you’re missing the bigger picture.

We’ve talked at length about engineering teams that focus on improving a single DORA metric, expecting that success in one area will naturally lead to better overall performance. In reality, focusing too much on just one metric, whether it’s deployment frequency or MTTR, often leads to an imbalance.

Think of it this way: your engineering team increases deployment frequency by 50%. Sounds great, right? But what if, at the same time, your change failure rate doubles? Now you’re deploying faster, but 1.5 times as many deployments failing at twice the rate means three times as many deployments introducing bugs or breaking features. You’ve gained speed, but at the cost of stability. This is the danger of tunnel vision. DORA metrics are designed to be read as a holistic system, where each metric influences the others.
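The arithmetic behind this example is easy to check. The short Python sketch below uses a hypothetical baseline of 20 deployments per week at a 10% change failure rate; only the 50% increase and the doubling come from the example above.

```python
def failed_deployments(deploys_per_week, change_failure_rate):
    """Expected number of deployments per week that cause a failure."""
    return deploys_per_week * change_failure_rate


# Hypothetical baseline: 20 deployments per week, 10% change failure rate.
before = failed_deployments(20, 0.10)
# Deployment frequency up 50%, change failure rate doubled.
after = failed_deployments(20 * 1.5, 0.10 * 2)

print(f"failed deployments per week: {before:.1f} -> {after:.1f}")
print(f"ratio: {after / before:.1f}x")
```

Whatever baseline you choose, the ratio is the same: 1.5 × 2 = 3, so three times as many failing changes reach users each week.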

The key isn’t just to improve one metric—it’s to strike a balance where speed, quality, and reliability all improve together. Engineering teams that truly succeed with DORA metrics understand that it’s not about winning the battle with one number; it’s about orchestrating all the metrics so they complement each other.

The flexibility of DORA metrics, often overlooked, is one of their greatest strengths.

This leads us to our next, very important point.

Myth #5: Change Failure Rate Should Always Be Zero

We all want perfection. But in complex systems, aiming for a zero change failure rate is not just unrealistic—it can be dangerous. The more you focus on eliminating every single failure, the more risk-averse your engineering team becomes. And risk-aversion? That kills innovation faster than failure ever could.

This might sound obvious, but failures aren’t the enemy. How you respond to them is what matters. Sure, reducing your change failure rate is important, but obsessing over it can stifle creativity and create a culture where no one wants to try new things. The goal should be to reduce the impact of failures, not to eliminate them entirely.
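One way to measure the impact of failures, rather than just how many there are, is to track how long it takes to recover from them. Here is a minimal Python sketch that computes a mean time to restore from incident records; the record format and sample timestamps are hypothetical, and in practice you would pull the real values from your own incident tracker.

```python
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical incident export: (started, restored) timestamps per failed change.
incidents = [
    (datetime(2024, 10, 1, 9, 0), datetime(2024, 10, 1, 10, 30)),
    (datetime(2024, 10, 8, 14, 0), datetime(2024, 10, 8, 14, 20)),
    (datetime(2024, 10, 15, 11, 0), datetime(2024, 10, 15, 11, 10)),
]


def mean_time_to_restore(records):
    """Average time from failure to recovery, as a timedelta."""
    if not records:
        return timedelta(0)  # no incidents, nothing to restore
    durations = [(restored - started).total_seconds() for started, restored in records]
    return timedelta(seconds=mean(durations))


print("failures:", len(incidents))
print("mean time to restore:", mean_time_to_restore(incidents))
```

A team whose failure count stays flat while this number falls is reducing the impact of its failures, which is the goal described above.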

Myth #6: There’s a “Perfect” DORA Benchmark

Benchmarks are helpful, right? They give you a reference point to see how your engineering team is doing compared to others. But here’s where things get tricky: there’s no universal DORA benchmark that works for every engineering team.

What works for one company could be completely irrelevant, or even harmful, for yours. Your industry, team size, product maturity, and roadmap all impact what “good” looks like for your engineering team. A fast-growing startup with a rapid release cycle is going to have very different DORA metrics than a healthcare company with strict compliance requirements. So chasing someone else’s numbers could lead you down the wrong path.

Instead of blindly following benchmarks, focus on continuous improvement. DORA metrics should be a tool for reflection, not competition. The real question isn’t how you compare to others—it’s how much better you can be than you were last quarter.
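Comparing against your own history can be as simple as tracking how each metric moved since last quarter. The Python sketch below assumes hypothetical quarterly snapshots of the four DORA metrics and treats a metric as improved when it moves in its favorable direction: higher deployment frequency, and lower lead time, change failure rate, and time to restore. The field names and numbers are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class QuarterSnapshot:
    # Hypothetical quarterly summary of the four DORA metrics.
    deploys_per_week: float
    lead_time_hours: float
    change_failure_rate: float
    restore_hours: float


# Direction in which each metric counts as better.
HIGHER_IS_BETTER = {
    "deploys_per_week": True,
    "lead_time_hours": False,
    "change_failure_rate": False,
    "restore_hours": False,
}


def quarter_over_quarter(previous, current):
    """Percentage change and an 'improved' flag for each metric."""
    report = {}
    for metric, higher_is_better in HIGHER_IS_BETTER.items():
        old, new = getattr(previous, metric), getattr(current, metric)
        # Percentage change is undefined when the previous value is zero.
        pct_change = round((new - old) / old * 100, 1) if old else None
        improved = new > old if higher_is_better else new < old
        report[metric] = (pct_change, improved)
    return report


if __name__ == "__main__":
    last_quarter = QuarterSnapshot(10, 48, 0.15, 6)
    this_quarter = QuarterSnapshot(12, 36, 0.18, 4)
    for metric, (pct, improved) in quarter_over_quarter(last_quarter, this_quarter).items():
        print(metric, pct, "improved" if improved else "not improved")
```

In this made-up example, speed and recovery improve while the change failure rate creeps up, which is exactly the kind of trade-off a single headline number would hide.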

So what can we do to combat these misconceptions?

How to Overcome These Misconceptions and Maximize the Value of DORA Metrics

Now that we’ve gone over the myths, how do you make sure your engineering team doesn’t fall into the same traps? How can you use DORA metrics without getting misled by just the numbers? The true power of DORA metrics isn’t in perfect scores—it’s in how you understand and apply what they tell you. This section will show you how to do just that.

1. DORA Metrics Aren’t Just for Tracking—They Help You Discover Hidden Insights

If you’re waiting for your engineering team or organization to grow before using DORA metrics, you’re missing the point. You don’t need DevOps maturity to use DORA metrics—they help build it.

The beauty of DORA metrics is that they’re as much about discovery as they are about measurement. Think of them as a mirror, not a ruler. It’s less about how you compare to others and more about what these metrics reveal about your engineering team’s workflows.

2. Speed Alone Can Be a Red Herring—It’s Controlled Velocity That Matters

Speed is appealing. The faster you can ship, the better—right? Not quite. Speed without direction is just velocity toward failure. It’s not about how fast you can go; it’s about how consistently you can go fast while maintaining stability. Fast deployments are only valuable if they don’t create more work downstream. High-performing engineering teams understand that DORA metrics aren’t asking for maximum speed—they’re asking for controlled, repeatable velocity. Shipping ten buggy releases in a week is worse than shipping five rock-solid ones. So, don’t just chase speed—chase stability in speed.

3. Track Across the Whole Pipeline—Not Just Where It’s Obvious

Production issues are usually symptoms, not the root cause. The problems you see at the end of the process often start much earlier. By the time you’re putting out fires in production, the real issue has already crept through your development and staging environments.

If your team is only tracking DORA metrics in production, you’re essentially catching problems when it’s already too late. The real value comes from monitoring these metrics throughout the entire pipeline—from development to testing to staging—so you can spot bottlenecks and inefficiencies before they become costly production issues.

For example, if your deployment frequency looks great in development but falls apart in staging, that’s a clear sign your pipeline has hidden inefficiencies. Maybe tests are taking too long, or perhaps manual review processes are creating bottlenecks. These issues don’t magically resolve themselves in production; they escalate. By measuring and analyzing DORA metrics across all stages, you can uncover these warning signs early and take action before they affect your end users.

4. Don’t Get Caught Up in Benchmarks—Your Context Matters Most

There are lots of articles that praise the latest DORA benchmarks, but those numbers might not apply to your engineering team at all. Trying to hit someone else’s benchmark is like wearing someone else’s suit—it might look good on them, but it won’t fit you.

The takeaway? DORA metrics aren’t about what you “should” be achieving; they show what’s possible for your engineering team. Your industry, your product’s maturity, and your organization’s culture all influence what success looks like for you. Instead of chasing a perfect benchmark, focus on how these metrics can help you improve over time.

DORA Metrics: A Flexible Framework for Real Growth

DORA metrics aren’t a rigid set of rules; they’re more like a compass than a fixed map. They won’t give you turn-by-turn directions, but they’ll help point your engineering team the right way. And like any tool, the real value isn’t just in tracking numbers but in how you use the insights to make improvements.

What counts as “good” DORA metrics for your engineering team today might look completely different a year from now, and that’s okay. In fact, that’s exactly how it should be. As your engineering team grows, changes, and faces new challenges, your goals and priorities will naturally shift too. The strength of DORA metrics is their flexibility: they can adapt to your team’s needs and show where you’re improving and where you might need to make adjustments.

Instead of chasing a universal “perfect” number, DORA metrics help you improve within your team’s unique context. If you’re ready to integrate DORA metrics into your workflow, our productivity experts can guide you every step of the way.