DORA produces compounding results only when a team understands why it wants the metrics and what they actually measure. Two teams, one large and one small, with similar deployment patterns could report the same DORA results, so how does either move ahead? Teams should ask how to use the data to advance rather than treating the numbers as absolutes. For example, if change lead time is high, look for bottlenecks in your onboarding process or check whether developers are burdened with non-core work. Insights like these surface only when you combine DORA with other engineering analytics and have a complete picture of the development process: who does what, and how the work is done.

Another challenge with DORA is poor interpretation of data caused by a lack of uniformity. Two metrics, change failure rate (CFR) and mean time to restore (MTTR), deal with failed deployments but don't define what a failure is. How can teams measure failure if they don't know what it is? Teams then rely on custom definitions to make sense of the results, and usually fall short. Along the same lines, deployment time to staging suffers from contextual challenges, because DORA only covers changes to production and leaves all other code changes to the user's discretion. The DORA metrics tell us to focus on delivery discipline to improve software health, but how teams should fix their day-to-day software delivery is something DORA cannot answer.
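To make the ambiguity concrete, here is a minimal Python sketch of how the same deployment history yields different CFR and MTTR values depending on what a team counts as a failure. The `Deployment` record, its field names, the `is_failure` rule, and the sample data are all hypothetical and chosen only for illustration; DORA does not prescribe any of them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List, Optional


@dataclass
class Deployment:
    """Hypothetical deployment record; field names are assumptions."""
    deployed_at: datetime
    rolled_back: bool = False
    caused_incident: bool = False
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def is_failure(d: Deployment) -> bool:
    # One possible team-level definition: a rollback OR an incident counts.
    return d.rolled_back or d.caused_incident


def change_failure_rate(
    deployments: List[Deployment],
    failed: Callable[[Deployment], bool] = is_failure,
) -> float:
    """Share of deployments that match the chosen failure definition."""
    if not deployments:
        return 0.0
    return sum(1 for d in deployments if failed(d)) / len(deployments)


def mean_time_to_restore(
    deployments: List[Deployment],
    failed: Callable[[Deployment], bool] = is_failure,
) -> Optional[timedelta]:
    """Average time from a failed deployment to restoration, or None."""
    durations = [
        d.restored_at - d.deployed_at
        for d in deployments
        if failed(d) and d.restored_at is not None
    ]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


# Made-up sample history: four deployments, one rollback, one incident.
t0 = datetime(2024, 1, 1, 9, 0)
deployments = [
    Deployment(t0),
    Deployment(t0 + timedelta(days=1), rolled_back=True,
               restored_at=t0 + timedelta(days=1, minutes=30)),
    Deployment(t0 + timedelta(days=2), caused_incident=True,
               restored_at=t0 + timedelta(days=2, minutes=90)),
    Deployment(t0 + timedelta(days=3)),
]

# Broad definition: rollbacks and incidents both count as failures.
print(change_failure_rate(deployments), mean_time_to_restore(deployments))

# Narrow definition: only incidents count as failures.
incidents_only = lambda d: d.caused_incident
print(change_failure_rate(deployments, incidents_only),
      mean_time_to_restore(deployments, incidents_only))
```

Passing the failure rule in as a parameter keeps the definition explicit and reviewable, so two teams comparing numbers can at least see whether they are measuring the same thing.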

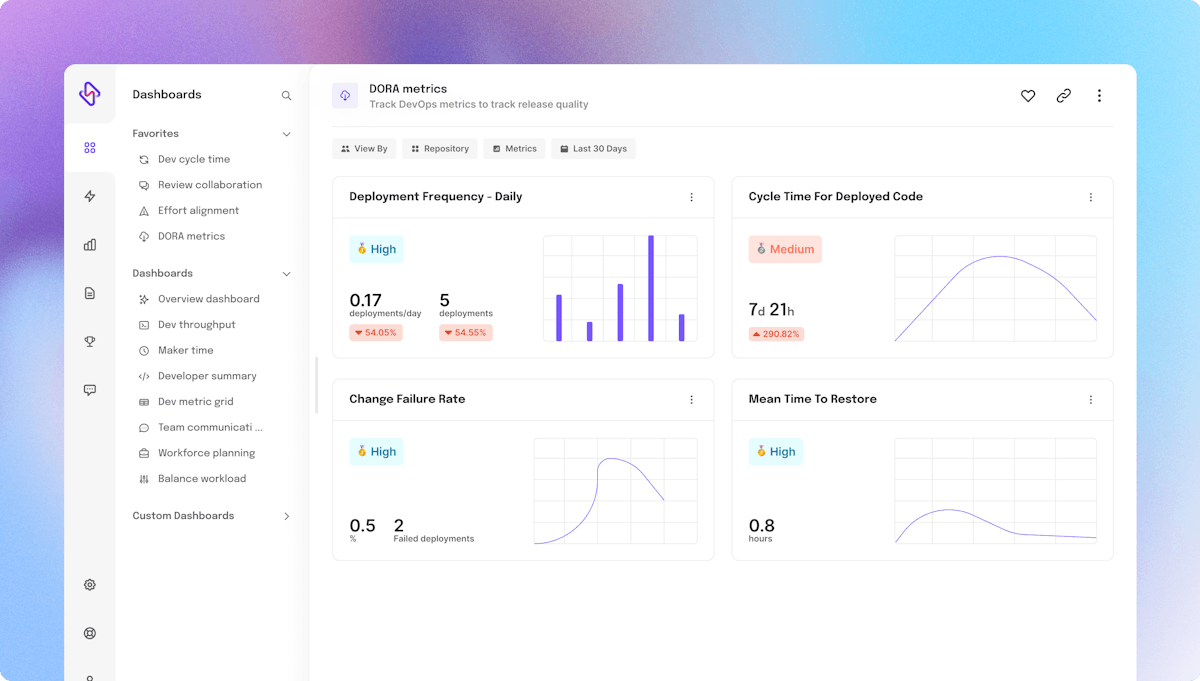

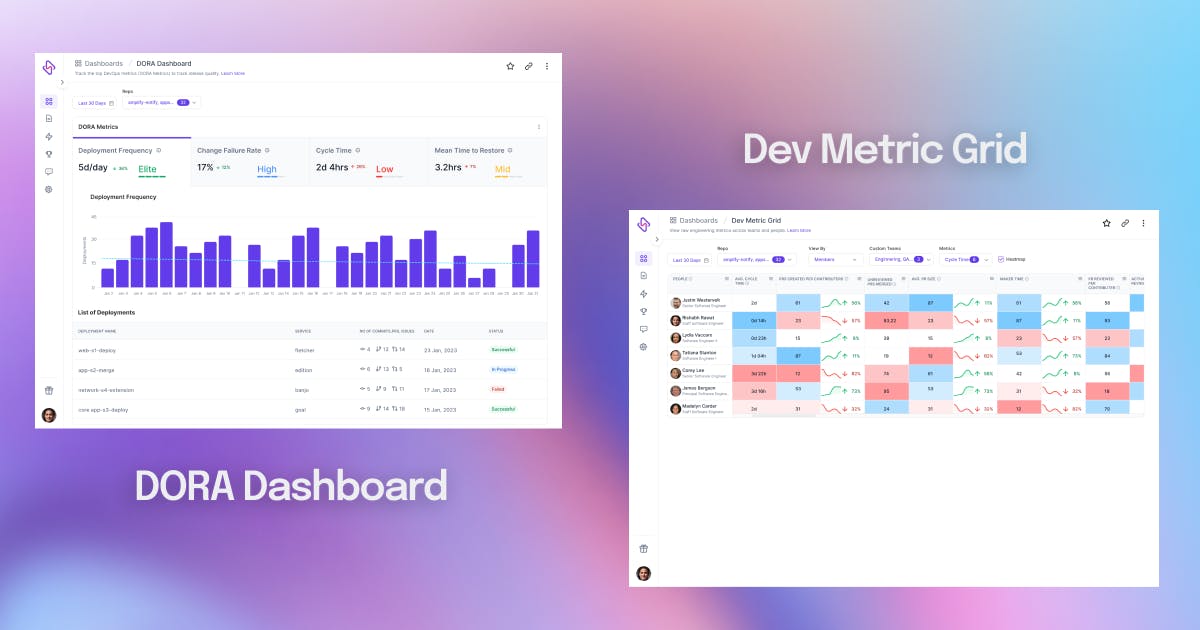

What's more, isolated DORA data can only give leaders added insight into velocity and stability, both highly quantitative benchmarks. But what about the quality of work done, the productivity of developers, or excess incident workload? Correlating DORA with these signals is necessary to make a real difference in optimizing the whole development process and, in turn, both the developer and the end-consumer experience. On top of that, tracking DORA alone cannot capture the business side of things. While EMs may want to push lead time or deployment frequency as a team's success criteria, top-tier leadership might be more interested in defining success by features released per week, month, or quarter.

These four metrics produce outstanding results only when implemented with subtlety and context. Even the Accelerate book talks about implementing the 24 behaviors in Appendix A first, before focusing on the metric outcomes. The broad focus of engineering leaders should still be on continuous improvement, product management, developer satisfaction, and anything else that impacts value delivery.

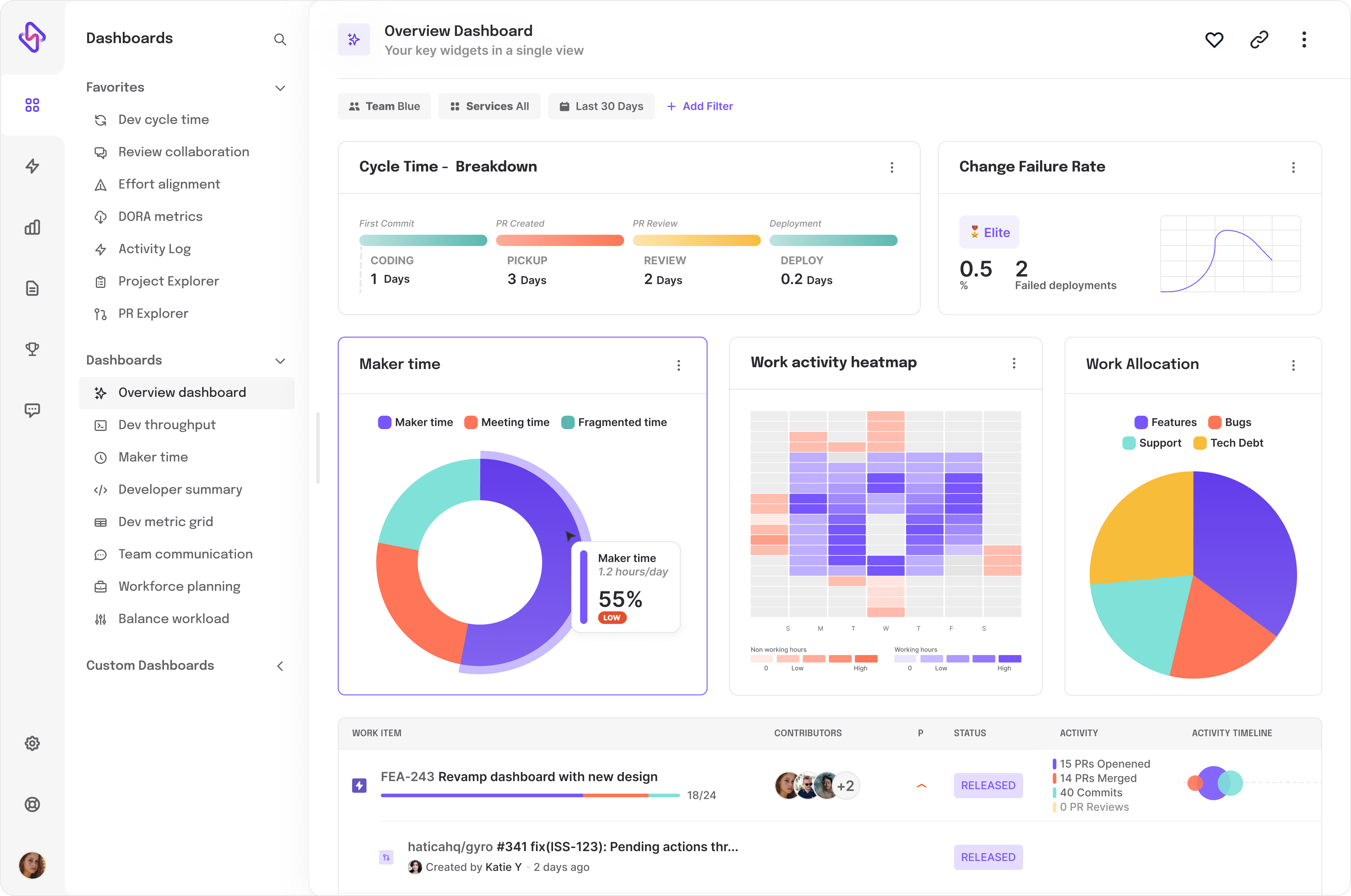

All of the above factors make a pressing case for additional indicators that support a proactive response, qualitative analysis of workflows, and SDLC predictability. Most organizations fail with DORA because, although they have data for the four metrics in hand, they lack the clarity to correlate it with other critical indicators such as review time, code churn, maker time, PR size, and more.
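As a rough illustration of what such a correlation could look like, the Python sketch below ranks candidate indicators by how strongly they move with lead time across a set of pull requests. The record fields (`lead_time_h`, `review_time_h`, `pr_size_loc`) and the numbers are invented for the example, and a plain Pearson coefficient is only one of several ways to compare these signals; treat it as a starting point, not as a method DORA or any particular tool defines.

```python
from math import sqrt
from typing import Dict, List, Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / sqrt(var_x * var_y)


def rank_drivers(
    records: List[Dict[str, float]],
    target: str = "lead_time_h",
) -> List[Tuple[str, float]]:
    """Sort every other field by absolute correlation with the target."""
    target_values = [r[target] for r in records]
    fields = [k for k in records[0] if k != target]
    scores = [
        (field, pearson([r[field] for r in records], target_values))
        for field in fields
    ]
    return sorted(scores, key=lambda item: abs(item[1]), reverse=True)


# Made-up per-PR data: lead time, review time (hours), PR size (lines changed).
prs = [
    {"lead_time_h": 10, "review_time_h": 2, "pr_size_loc": 300},
    {"lead_time_h": 20, "review_time_h": 4, "pr_size_loc": 100},
    {"lead_time_h": 30, "review_time_h": 6, "pr_size_loc": 250},
    {"lead_time_h": 40, "review_time_h": 8, "pr_size_loc": 120},
    {"lead_time_h": 50, "review_time_h": 10, "pr_size_loc": 200},
]

for field, score in rank_drivers(prs):
    print(f"{field}: {score:+.2f}")
```

In this toy dataset review time tracks lead time far more closely than PR size does, which is the kind of pointer that tells a team where to look first. Correlation alone does not establish the cause, so the ranking is a prompt for investigation rather than a verdict.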

Only with 360-degree profiling of the team's workflow can executives create true workability and achieve business goals. Deeper visibility also weeds out false positives and negatives that can creep into insights derived from DORA's isolated dataset. DORA, when combined with more context, customization, and traceability, can offer a true picture of where an engineering team is headed and the steps needed at every level to resolve passive bottlenecks and hidden fault lines.

Conclusion

DORA is just a start, and it serves its purpose well. However, glancing at numbers is no longer enough; EMs also need to look at the practices and people behind the numbers, and at the barriers that keep teams from realizing their absolute best. Engineering excellence goes hand in hand with a team's productivity and well-being. Hatica has combined DORA with 130 other metrics across 13 dashboards to drive an engineering team's success, create a culture of continuous improvement, and build on developer experience. Request a demo to know more →