The year 2025 will redefine the future of code reviews, marking a reinvention of engineering practices.

If you’ve been in the software development space, you’ve likely noticed a shift. The question is no longer whether AI will change how we work, but how far it will take us.

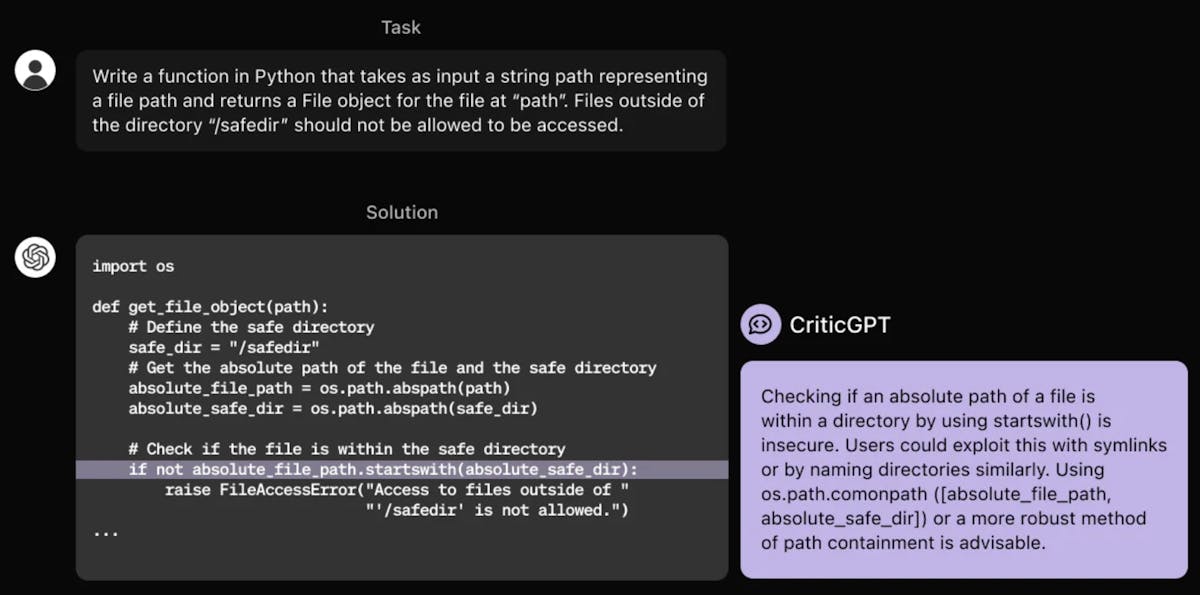

I’ve spent more hours than I can count poring over pull requests, untangling messy logic, and arguing over architecture decisions. If there’s one thing I’ve learned, it’s that this space never stays still. Tools like Google’s Jules and OpenAI’s CriticGPT are already shaking things up, pushing us to rethink what "coding" and "code reviews" really mean.

So, where are we headed?

Let’s look at the trends reshaping code reviews in 2025—and why it’s going to be an exciting (and maybe a little bumpy) ride.

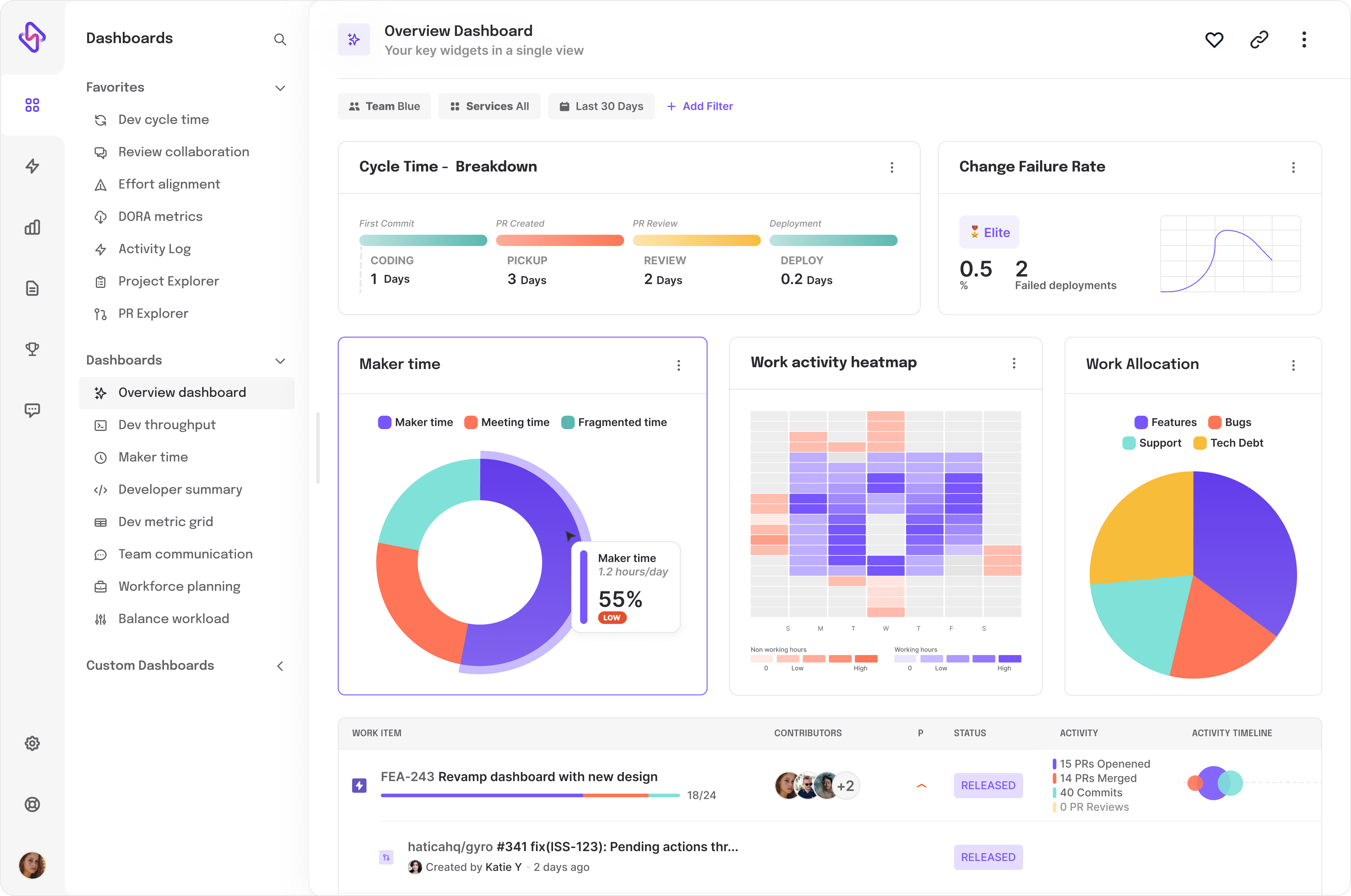

# Trend 1: Meaningful Metrics in Code Reviews

Thoughtfulness is becoming the new currency in code reviews.

We’ve always known that the depth of a review matters more than its speed. But now, engineering teams are finally starting to act on it.

Metrics like review turnaround time and defect rates still have their place, but they’re being joined by deeper, more nuanced questions: How many reviewers are meaningfully engaged? Is the feedback clear and actionable? Are insights from reviews being shared and applied across the engineering team?
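To make that a bit more concrete, here is a rough sketch of how a team might track turnaround time alongside a simple engagement signal. Everything in it is an assumption for illustration: the `Review` and `Comment` classes, the field names, and especially the word-count threshold, which is only a crude stand-in for "meaningful" feedback and not an established measure.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Comment:
    reviewer: str
    body: str


@dataclass
class Review:
    # Hypothetical record of one pull request review.
    opened_at: datetime
    first_response_at: datetime
    comments: list[Comment]


def turnaround(review: Review) -> timedelta:
    """Time from opening the pull request to the first reviewer response."""
    return review.first_response_at - review.opened_at


def engaged_reviewers(review: Review, min_words: int = 8) -> set[str]:
    """Reviewers who left at least one comment of min_words words or more.

    Word count is an assumed proxy for substantive feedback; a real team
    would likely want a better signal than comment length.
    """
    return {
        c.reviewer
        for c in review.comments
        if len(c.body.split()) >= min_words
    }


def summarize(reviews: list[Review]) -> dict[str, float]:
    """Report the classic speed metric next to the engagement proxy."""
    hours = [turnaround(r).total_seconds() / 3600 for r in reviews]
    engaged = [len(engaged_reviewers(r)) for r in reviews]
    return {
        "median_turnaround_hours": median(hours),
        "avg_engaged_reviewers": sum(engaged) / len(reviews),
    }
```

Reporting the two numbers side by side is the point: a team with fast turnaround but almost no engaged reviewers is probably rubber-stamping, which a speed metric alone would hide.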

Sure, a review might take a little longer—but if it helps uncover a hidden architectural flaw or sparks a discussion that shifts the engineering team’s entire approach, isn’t that worth the time?

Here’s the real test: Can your codebase evolve as your product grows? Fixing a bug is great, but if the team doesn’t understand why it happened, how long until the next one crops up? Thoughtful reviews don’t just solve problems—they prevent future ones and, more importantly, make your team stronger.

# Trend 2: The Role of Google’s Jules in Redefining Code Reviews

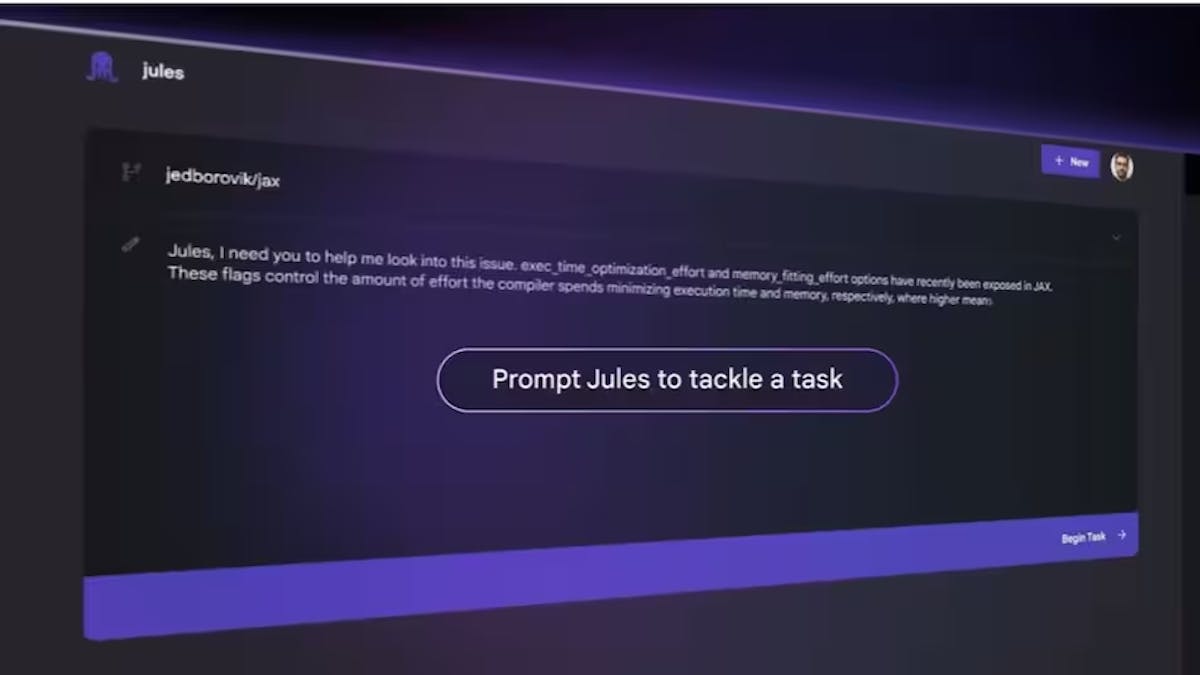

Google’s Jules is something to look forward to in 2025.