As engineering leaders, we all strive to build high-performing software teams that ship exceptional software. Metrics are our guiding lights, helping us track progress, identify areas for improvement, and ultimately achieve our goals. But what happens when the very metrics we rely on become unreliable? It is even worse when they become our sole targets and we lose sight of the overall efficiency of the process and the effectiveness of the product shipped. Enter Goodhart's Law.

Named after economist Charles Goodhart, this law is most often quoted as "when a measure becomes a target, it ceases to be a good measure." In simpler terms, focusing too heavily on a single metric can lead to unintended consequences. Let's illustrate this with a common scenario.

Imagine a team laser-focused on driving down the number of bugs reported. This might seem like a win at first. But what if, to achieve this target, the team starts prioritizing quick fixes over long-term solutions, leading to a backlog of technical debt? Suddenly, the low bug count becomes a false indicator – the code may appear stable on the surface, but it's a ticking time bomb waiting to explode.

Similarly, if cycle time is a key metric, teams might rush through tasks to reduce time, potentially sacrificing code quality and thorough testing. This focus on speed over quality can result in more defects and technical debt, ultimately slowing down the project.

Let's explore how we can avoid this pitfall.

The Pitfalls of Overreliance on Certain Metrics

When we focus too much on a single metric, like bug count, it can lead to problems that we might not anticipate. Let's explore why relying on just one measure can be risky in the world of software development:

1. Misaligned Incentives

Sometimes, metrics can push people in the wrong direction. For instance, if we only look at how many lines of code a developer writes, they might start cranking out lots of code just to hit that target. This can lead to sloppy work and more errors. Instead, metrics like code complexity or code coverage can encourage developers to write better, cleaner, and more maintainable code.

2. Gaming the System

If a single metric is used to determine success, people will, over time, find ways to present that metric in a favorable light, whether by oversimplifying or overcomplicating how it is measured. It might sound harsh, but this amounts to cheating the system. It can result in cherry-picked data or even falsified results to meet targets. Such practices undermine trust and shift focus away from genuinely improving software quality and delivering value.

3. Tunnel Vision

Focusing on just one metric, like deployment frequency, can narrow our view. If teams rush to release updates without thorough testing, it can lead to buggy releases that frustrate users and harm the brand's reputation. A balanced approach, considering metrics like defect escape rate and user satisfaction alongside deployment frequency, provides a fuller picture and promotes a healthier development process.
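One way to make this pairing concrete is to check a speed metric against a quality guardrail every reporting period. The sketch below is only an illustration: the `MetricSnapshot` fields, the `tolerance` threshold, and the sample numbers are all hypothetical, and the defect escape rate is assumed to mean the share of defects found by users after release.

```python
from dataclasses import dataclass


@dataclass
class MetricSnapshot:
    """One reporting period for a team (all fields are hypothetical)."""
    deployments: int       # releases shipped in the period
    escaped_defects: int   # defects found by users after release
    total_defects: int     # all defects found in the period


def defect_escape_rate(s: MetricSnapshot) -> float:
    """Share of defects that reached users; 0.0 when no defects were found."""
    if s.total_defects == 0:
        return 0.0
    return s.escaped_defects / s.total_defects


def tunnel_vision_warning(prev: MetricSnapshot, curr: MetricSnapshot,
                          tolerance: float = 0.05) -> bool:
    """True when deployments rose while the escape rate worsened by more than `tolerance`."""
    faster = curr.deployments > prev.deployments
    worse = defect_escape_rate(curr) - defect_escape_rate(prev) > tolerance
    return faster and worse


# Made-up numbers: more deployments, but far more defects reaching users.
last_quarter = MetricSnapshot(deployments=20, escaped_defects=2, total_defects=20)
this_quarter = MetricSnapshot(deployments=35, escaped_defects=9, total_defects=30)
print(tunnel_vision_warning(last_quarter, this_quarter))  # prints True
```

A warning here is a prompt for a conversation, not a verdict. The point is simply that the target metric is never read without its counterweight.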

4. Short-Term Focus vs. Long-Term Value

Metrics that emphasize immediate results, like the number of features shipped or bugs fixed, can distract from long-term goals. Prioritizing quick fixes might ignore deeper issues like technical debt and keep-the-lights-on (KTLO) work, leading to bigger problems later. Metrics that reflect long-term value, such as time to market for key features or user retention rates, ensure that teams build software that meets user needs and delivers lasting benefits.

Making Metrics Work for You: Practical Strategies

Metrics are a powerful tool to assess software development success, but a multi-metric approach is just the first step. Here are some additional strategies to maximize their effectiveness:

1. Craft SMART Goals Fueled by Metrics

Clearly define your project goals. What do you want to achieve? Make sure these goals are specific and measurable, allowing you to track progress and definitively assess success at the end. Importantly, tie these goals directly to the metrics you choose. This ensures the metrics aren't vanity metrics (feel-good numbers) but actively guide your team towards desired outcomes.

For instance, instead of a vague goal of "improve user experience," aim for "increase user engagement by 15% within the next quarter, as measured by average session duration or number of features tried in the product."
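To show how such a goal ties to a number you can track, here is a minimal sketch that stores the goal as data and reports progress toward it. The `MetricGoal` class, its field names, and the baseline of 8 minutes are assumptions made up for illustration, not part of any tool.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class MetricGoal:
    """A goal tied to one metric (names and values are hypothetical)."""
    metric: str
    baseline: float          # value when the goal was set
    target_increase: float   # 0.15 means "+15%"
    deadline: date           # the time-bound part of the goal

    @property
    def target(self) -> float:
        """Absolute value the metric must reach."""
        return self.baseline * (1 + self.target_increase)

    def progress(self, current: float) -> float:
        """Fraction of the baseline-to-target gap closed, clamped to [0, 1]."""
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0
        return max(0.0, min(1.0, (current - self.baseline) / gap))


goal = MetricGoal(
    metric="average session duration (minutes)",
    baseline=8.0,
    target_increase=0.15,
    deadline=date(2025, 3, 31),
)
print(f"target: {goal.target:.1f} min")         # 8.0 * 1.15
print(f"progress: {goal.progress(8.6):.0%}")    # halfway from 8.0 to 9.2
```

Writing the baseline and deadline down next to the target makes it harder for the goal to drift into a vanity number later.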

2. Establish Achievable Yet Ambitious Targets

Goals should push your team to grow, not break it. The key is to strike the right balance.

Setting targets that are too low provides little motivation, while overly ambitious targets can be demoralizing. Involve your team in setting goals and targets, building a sense of ownership and buy-in. Leverage historical data and industry benchmarks to set realistic targets that stretch your team. Think of it as setting the bar high enough to inspire growth without being out of reach.

3. Ensure Alignment with Business Objectives

To make sure your goals have a meaningful impact, connect them to the broader business objectives. Metrics shouldn't stand alone—they should tie into the overall business strategy and contribute to the company's bigger picture. This not only gives your engineering team a sense of purpose but also shows how their efforts are directly contributing to the company's success.

Moreover, attaching clear deadlines to these goals creates a sense of urgency and helps you track progress over time. Time-bound goals make it easier to measure success and spot areas that might need adjustments. You can regularly review and update these goals throughout the project to ensure they stay relevant and reflect any changes in priorities.

4. Incorporate Continuous Monitoring and Improvement

Don’t let your metrics gather dust! Regularly check in to see if they’re still doing their job.

Are they truly reflecting your project's health? Are they encouraging the right behaviors in your team? As your project evolves, be ready to tweak your metrics to keep them meaningful and effective.

(Read more on Self-assessing engineering team’s health.)

Moreover, you can make data analysis a core part of your decision-making. Use the insights from your metrics to decide where to allocate resources, how to steer the project, and what to prioritize. Foster a culture where data guides decisions, rather than relying on intuition or gut feelings alone. This approach not only brings clarity but also boosts confidence in the decisions being made.

After hitting major milestones or releases, you can also hold post-mortems to see how well or how badly your metrics performed. Did they give you the insights you needed? Were there any surprises or unintended consequences? Use these reviews to identify any weaknesses and refine your approach to metrics for future projects. This continuous learning process ensures that your metrics stay relevant and your projects stay on track.

Implementing Balanced Metrics: A Compass, Not a Destination

While focusing on single metrics can be tempting, effective software development requires a more nuanced approach. Imagine a ship navigating by a single star – it might get you there eventually, but it's risky and lacks the precision offered by a constellation of instruments. Balanced metrics are our constellation, providing a comprehensive view of the software development process.

To achieve balanced metrics, we can leverage two powerful frameworks: SPACE and DORA. Each offers a unique lens through which to assess our software development efforts.

1. The SPACE Model: A Holistic View of Team and Project Performance

The SPACE model focuses on five key dimensions that paint a well-rounded picture of your team and project:

- Satisfaction and well-being (S): Track both developer and customer satisfaction. Happy developers are more productive, and satisfied customers translate to a successful product. Utilize surveys, pulse checks, and customer reviews to gauge sentiment.

- Performance (P): Measure system performance using metrics like response time and uptime. A well-performing system translates to a smooth user experience.

- Activity (A): Monitor the volume and nature of work completed, like features shipped and lines of code written. However, ensure a balance between quantity and quality by incorporating code coverage or complexity metrics.

- Communication and collaboration (C): Effective communication is crucial. Track metrics like code review participation or use of collaboration tools to assess communication effectiveness.

- Efficiency and flow (E): Evaluate resource utilization by tracking deployment frequency, lead time for features, and rework rates.

By considering these interconnected aspects, the SPACE framework helps us navigate towards a more holistic understanding of team performance.

2. DORA Metrics: A Lens on Delivery Performance

The DORA metrics, on the other hand, focus specifically on the efficiency and stability of software delivery. These metrics provide valuable insights into areas like:

- Deployment Frequency: Frequent deployments can indicate a smoother development process and faster time-to-market.

- Lead Time for Changes: A shorter lead time reflects a more efficient development pipeline.

- Mean Time to Restore (MTTR): A lower MTTR signifies a more resilient system and faster resolution of issues.

- Change Failure Rate: A low change failure rate indicates a stable development process with robust testing practices.
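As a rough illustration, the four metrics above can be computed together from a list of deployment records, so that no single one is read in isolation. This is a sketch under stated assumptions: the `Deployment` record and its field names are hypothetical, lead time is summarized with a median and restore time with a mean, and your own tooling may define or aggregate these differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_summary(deploys: list[Deployment], period_days: int) -> dict:
    """Summarize the four delivery metrics for one reporting period."""
    if not deploys or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    restore_times = [d.restored_at - d.deployed_at
                     for d in failures if d.restored_at is not None]
    mttr = (sum(restore_times, timedelta()) / len(restore_times)
            if restore_times else None)
    return {
        "deployments_per_week": len(deploys) * 7 / period_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "mean_time_to_restore": mttr,
    }


# Made-up data: five deployments over two weeks, one of which failed.
base = datetime(2024, 1, 1)
h = timedelta(hours=1)
deploys = [
    Deployment(base, base + 24 * h),
    Deployment(base + 48 * h, base + 54 * h),
    Deployment(base + 96 * h, base + 132 * h, failed=True,
               restored_at=base + 134 * h),
    Deployment(base + 168 * h, base + 180 * h),
    Deployment(base + 240 * h, base + 288 * h),
]
for name, value in dora_summary(deploys, period_days=14).items():
    print(f"{name}: {value}")
```

Reporting all four values in one place is the design choice that matters here: a rise in deployment frequency shows up next to any change in failure rate or restore time.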

Remember, balanced metrics are a journey, not a destination.

By implementing these frameworks and continuously refining our approach, we can mitigate the pitfalls of Goodhart's Law. These frameworks provide a more balanced and holistic view and help prevent teams from developing tunnel vision.

By adopting these practices, we move beyond the limitations of single metrics and empower our teams to become active participants in shaping a culture of continuous learning, innovation, and ultimately, delivering high-quality software.

Steering Clear of Goodhart's Law: Building a Culture that Inspires

Metrics are powerful tools, but they shouldn't dictate our every move. Just like a doctor uses tests to diagnose an illness, we should use metrics to identify areas where our engineering team can improve and then develop strategies to address them. Goodhart's Law is a good reminder that focusing too much on hitting specific targets can lead to unintended consequences.

The key is balance.

Instead of just one metric, we need a diverse set that gives us a complete picture of how we're performing. This helps us avoid tunnel vision and helps leaders ensure that all critical dimensions of development are being monitored and improved.

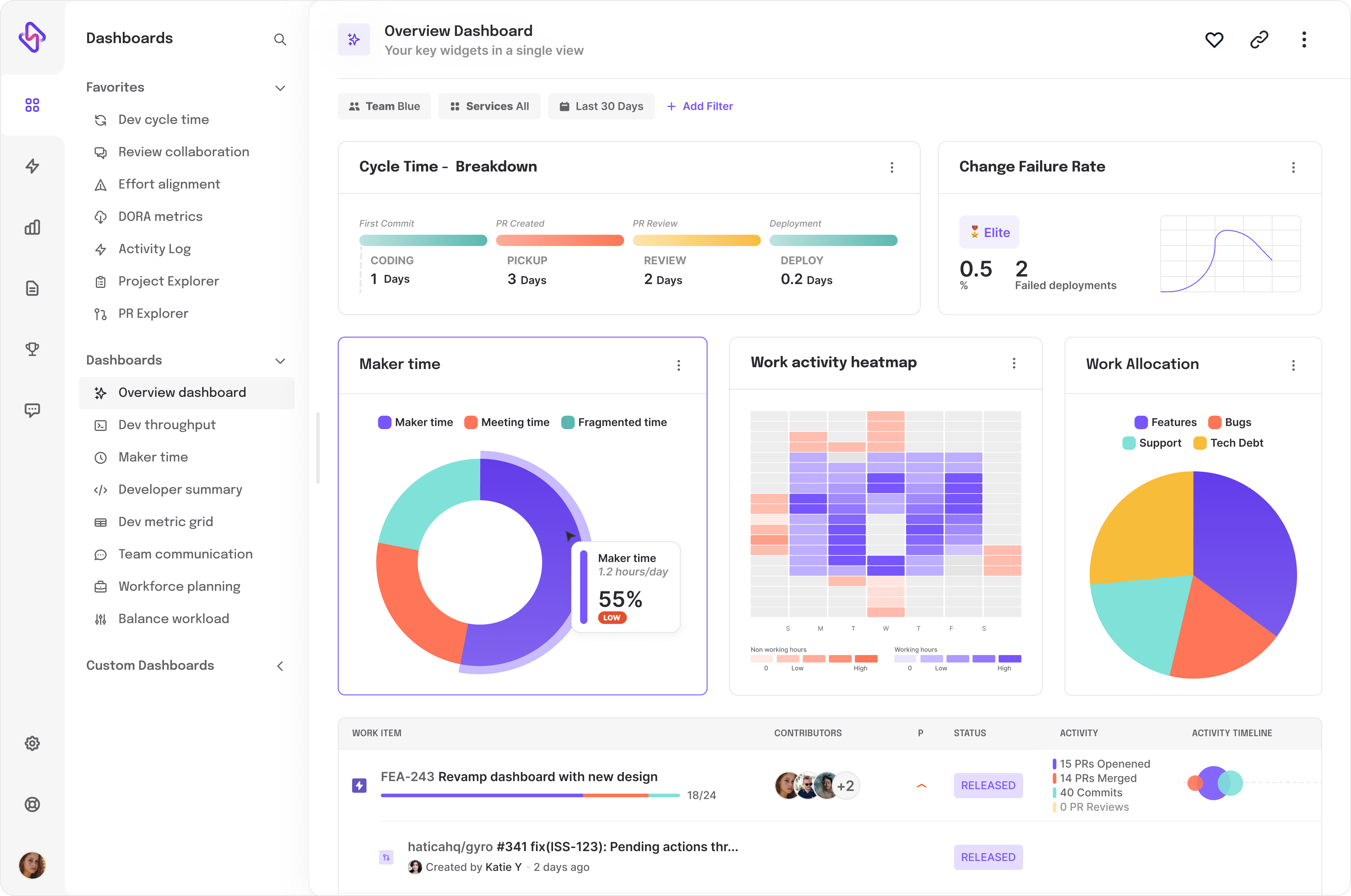

With an engineering management platform like Hatica, you can avoid the pitfalls of Goodhart's Law and build a culture of continuous improvement. If you'd like to see how engineering analytics can help you with this in real time, our productivity experts can help you get started!