The past few years have been a rollercoaster for engineering teams. Budgets grew, salaries rose, teams expanded, and cloud bills became an unavoidable reality. But one question keeps coming back to me: “Are we really delivering value?”

For a long time, it was easy to push that question aside. When the economy was booming and resources were plentiful, the focus was on shipping features and scaling fast. But things have changed. With tighter budgets and more scrutiny, it’s no longer just about building more—it’s about building smarter and proving real impact.

This shift has me thinking about how we measure, monitor, and track progress. These may sound similar, but they each play a unique role:

- Measuring helps us understand where we stand.

- Monitoring surfaces red flags across the SDLC before they become problems.

- Tracking shows patterns that can reveal gaps or inefficiencies.

Every company approaches this differently. Some stick to tracking basics, while others dive deeper into connecting metrics to business outcomes. The difference comes down to how clearly you understand what “value” really means for your team.

As we head into 2025, I’ve been reflecting on this a lot. What does productivity actually look like for engineering teams today? How do we measure success in a way that focuses on outcomes, not just output?

So here are some thoughts on what I’ve seen, the challenges I’ve faced, and the opportunities I believe we have to measure what really matters.

1. Doubling Down on Developer Experience (DevEx)

Friction is the enemy of progress. Whether you call it DevEx or platform engineering, the focus remains the same: eliminate distractions and empower developers to focus on building and delivering impact.

In 2025, this focus will sharpen. For years, Developer Experience felt intangible; now it’s impossible to ignore. A developer’s day-to-day experience shapes everything, from how smoothly an engineering team operates to how fast it can deliver. Measuring that experience, though, is still a tough nut to crack.

There are some metrics that help—like onboarding time (how fast new hires can contribute) or context-switching frequency (how often developers get interrupted in a typical workday). But the things that really keep teams thriving—mentoring, improving processes, or writing great documentation—often don’t get the recognition they deserve. These are the behind-the-scenes efforts that hold everything together.
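Onboarding time is one of the more tractable DevEx signals. Here is a minimal sketch, assuming “able to contribute” is approximated by a new hire’s first merged pull request and that start and merge dates are available. The hires, dates, and function names are made up for illustration.

```python
from datetime import date
from statistics import median

# Hypothetical data: each new hire's start date and the date of their first merged PR.
# "First merged PR" is an assumed proxy for "able to contribute", not a standard definition.
new_hires = {
    "hire_1": (date(2025, 1, 6), date(2025, 1, 17)),
    "hire_2": (date(2025, 2, 3), date(2025, 2, 10)),
    "hire_3": (date(2025, 3, 3), date(2025, 3, 28)),
}


def days_to_first_merge(hires: dict[str, tuple[date, date]]) -> dict[str, int]:
    """Calendar days from start date to first merged PR, per hire."""
    return {name: (merged - started).days for name, (started, merged) in hires.items()}


def median_onboarding_days(hires: dict[str, tuple[date, date]]) -> float:
    """Median across hires, so one unusually slow or fast start doesn't skew the picture."""
    return median(days_to_first_merge(hires).values())


print(days_to_first_merge(new_hires))     # {'hire_1': 11, 'hire_2': 7, 'hire_3': 25}
print(median_onboarding_days(new_hires))  # 11
```

Calendar days include weekends and holidays; a team might prefer working days, which is another definitional choice worth making explicitly.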

Are our tools actually making things smoother, or are they unintentionally creating roadblocks?

How do we notice and appreciate the “glue work” that’s so essential but doesn’t show up in typical metrics?

It’s not about having all the answers—just starting to notice these things has made a big difference in understanding what really supports the team. It’s made me rethink how we define progress.

2. Stop Guessing What Developers Need—Start Listening

Too many leaders assume they know their teams’ pain points, but 2024 taught us a hard lesson: assumptions can backfire. Misguided AI investments promised efficiency but often delivered chaos, because they ignored critical issues like technical debt, slow build times, poor documentation, and a lack of focus time. The result? Teams were left battling the same problems, just with shinier AI tools.

Having worked with diverse teams across varying cultures, I’ve found one thing to be clear: reactive leadership is a trap. Far too often, leaders rush to implement the latest tools or processes without asking the most important question: what do our engineering teams and developers actually need?

Take AI-powered solutions, for example. They sound revolutionary, but without fixing the basics, like inefficient builds or unclear priorities, they only add layers of complexity and frustration. It’s a classic case of solving the wrong problem, or solving the right problem the wrong way.

Great leadership doesn’t guess—it listens.

Lately, I’ve found myself asking:

- Are our tools actually helping developers, or are they just adding more noise?

- How do we shine a light on that glue work—the work that doesn’t make it into dashboards but makes everything else possible?

These questions keep coming up because they matter. The little things we overlook are often the ones that make the biggest difference. If we don’t figure out how to measure and celebrate them, we’re missing a huge part of the picture.

3. Leading with Outcome-Driven Engineering Metrics

Platform teams are at a pivotal crossroads: prove your value or face cuts. The era of measuring engineering success by lines of code or basic activity metrics is behind us. Today, it’s not about how much work gets done—it’s about how that work moves the needle for the business.

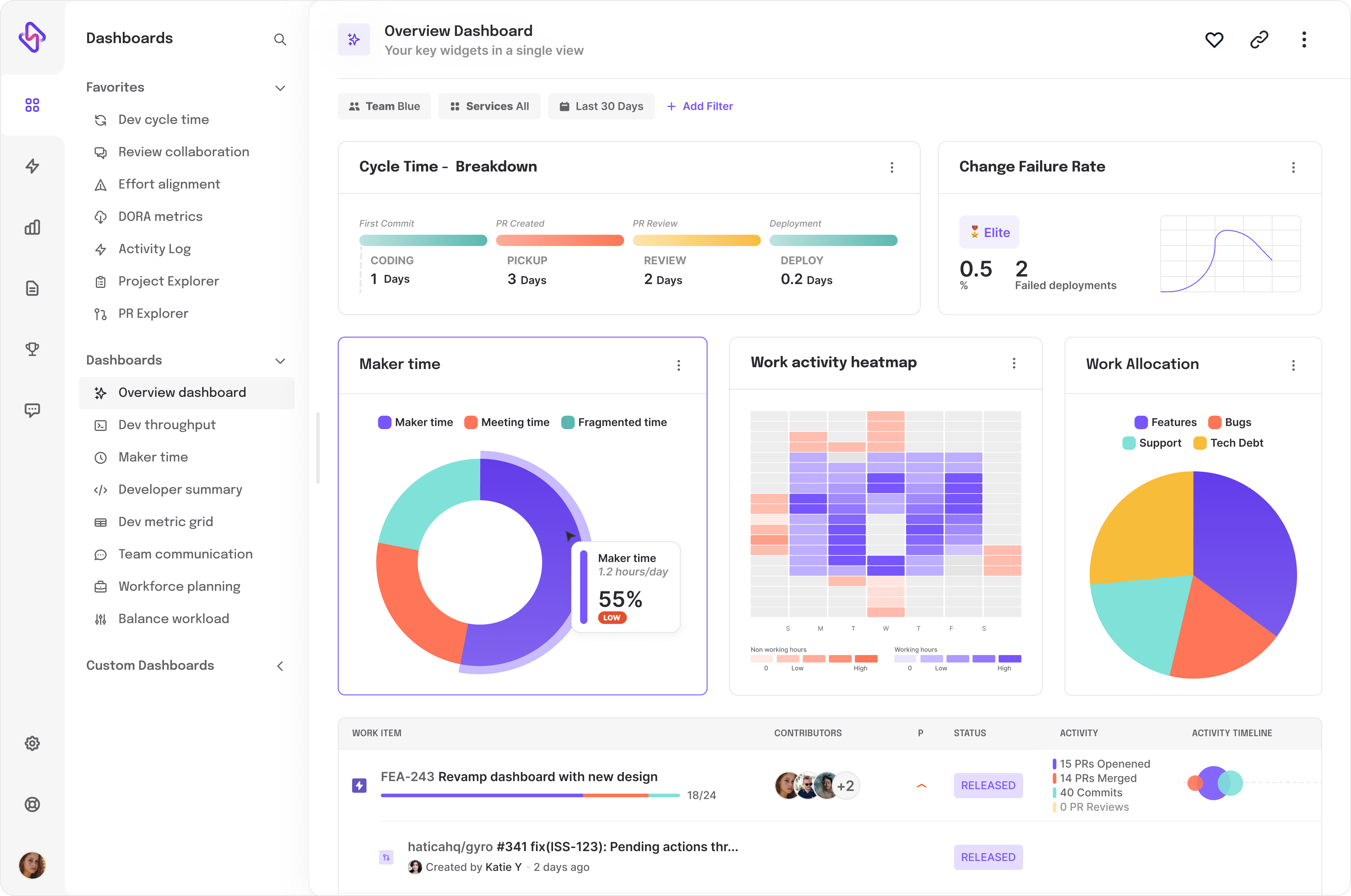

Leading organizations are embracing frameworks like DORA, SPACE, and the DX Core 4 to bridge engineering efforts with business outcomes. But here’s the challenge: efficiency isn’t the same as effectiveness. While metrics like deployment frequency and lead time tell us how fast we’re shipping, they don’t always tell us if we’re shipping the right things.

Let’s break it down with an example: imagine a team deploying features weekly. Impressive, right? But what if those features don’t drive user engagement or improve revenue? What have you really accomplished? The problem isn’t the metrics—it’s how we interpret them.
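To make the efficiency side concrete, here is a minimal sketch of how the two speed metrics named above, deployment frequency and lead time for changes, could be computed from deployment records. The `Deployment` record, its fields, and the sample data are hypothetical; in practice these timestamps would come from your version control and CI/CD systems.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    # Hypothetical record: when a change was committed and when it reached production.
    committed_at: datetime
    deployed_at: datetime


def deployment_frequency(deploys: list[Deployment], window_days: int) -> float:
    """Average number of production deployments per week over the window."""
    return len(deploys) / (window_days / 7)


def median_lead_time(deploys: list[Deployment]) -> timedelta:
    """Median commit-to-production time ("lead time for changes" in DORA terms)."""
    return median(d.deployed_at - d.committed_at for d in deploys)


# Illustrative data: one deployment per week for four weeks, each two days after its commit.
start = datetime(2025, 1, 6)
deploys = [
    Deployment(committed_at=start + timedelta(weeks=w),
               deployed_at=start + timedelta(weeks=w, days=2))
    for w in range(4)
]

print(deployment_frequency(deploys, window_days=28))  # 1.0
print(median_lead_time(deploys))                      # 2 days, 0:00:00
```

This team ships every week with a two-day lead time, and nothing in either number says whether those features moved engagement or revenue. That is the gap outcome-driven metrics try to close.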

The shift happening in 2025 is toward outcome-driven engineering metrics—metrics that connect engineering efforts directly to business goals. For instance:

- Time Allocation Ratios: Tracking the balance between innovation and maintenance can be revealing, but it’s the definition of “maintenance” that matters. Is refactoring old code an investment in future speed, or is it just deferred toil?

- Feature Impact Metrics: Rather than simply measuring feature delivery, link engineering efforts to user retention, engagement, or revenue growth.
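A time allocation ratio is simple arithmetic; the hard part is the classification. This minimal sketch assumes work items are already tagged with a category and logged hours (the items and category names are hypothetical) and makes the treatment of refactoring an explicit parameter rather than a hidden default.

```python
from collections import defaultdict

# Hypothetical work items: (description, category, hours spent).
work_items = [
    ("New onboarding flow", "innovation", 30),
    ("Refactor billing module", "refactoring", 12),
    ("Patch flaky CI job", "maintenance", 8),
    ("Incident follow-up", "maintenance", 10),
]


def allocation_ratios(items, refactoring_counts_as="maintenance"):
    """Return each bucket's share of total hours.

    `refactoring_counts_as` decides which bucket refactoring work lands in,
    so the definitional choice is visible instead of buried in the data.
    """
    totals = defaultdict(float)
    for _description, category, hours in items:
        bucket = refactoring_counts_as if category == "refactoring" else category
        totals[bucket] += hours
    grand_total = sum(totals.values())
    return {bucket: hours / grand_total for bucket, hours in totals.items()}


print(allocation_ratios(work_items))
# {'innovation': 0.5, 'maintenance': 0.5}
print(allocation_ratios(work_items, refactoring_counts_as="innovation"))
# {'innovation': 0.7, 'maintenance': 0.3}
```

The same 60 logged hours read as a 50/50 split or a 70/30 split depending solely on where refactoring is counted, which is why the definition of “maintenance” deserves an explicit team decision.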

These shifts aren’t easy. They force leadership to confront tough questions and trade-offs we’d often prefer to avoid. But that discomfort is a signal—you’re asking the questions that matter.

The leaders who lean into this discomfort will redefine how engineering is valued. Proving impact isn’t just about metrics—it’s about showing how engineering drives real business outcomes. The future belongs to teams that make this connection crystal clear.

4. Asking the Right Questions

What I’ve learned is that the most valuable insights often come from asking the right questions—not complicated ones, but the ones that cut straight to the heart of the matter. Instead of asking, “What are we measuring?” I’ve started asking, “What decisions will this metric help us make?” It’s a subtle shift, but it’s fundamentally changed how I think about metrics and their purpose.

For example:

- Are we building features that genuinely move the needle for users, or are we just crossing items off a backlog?

- Do our developers have the clarity and tools they need to stay focused, or are they drowning in unnecessary complexity?

- Are we truly balancing innovation with maintaining the systems that keep everything running smoothly?

One approach that’s had a lasting impact for me is simply asking developers to rate their own productivity—and then watching how those ratings change over time.

When combined with system-based metrics, these self-assessments paint a much clearer picture of what’s working and what’s not. It’s not just about numbers on a dashboard anymore; it’s about the human stories behind those numbers. It’s about understanding where we’re falling short, what’s creating friction, and where we have opportunities to enable teams to do their best work.

As a founder, I believe this is what leadership is all about: asking the questions others overlook, connecting the dots between people and performance, and making decisions that drive meaningful outcomes for the future—not just outputs. That’s how we move from measuring work to truly amplifying impact.

5. Metrics That Uncover, Not Simplify

One of the most dangerous traps in engineering is our obsession with reducing productivity to convenient-looking dashboards and oversimplified KPIs. The reality is that engineering work is inherently messy, and attempts to collapse it into a single number, without building context into what you are measuring, often do more harm than good.

Take “invisible work,” for instance—the untracked hours spent mentoring, maintaining stability, or improving processes. These contributions are invaluable, yet they’re often the first to be overlooked in performance reviews or quarterly metrics.

The question I’ve been grappling with is this: How do we value the work that doesn’t directly translate into deliverables but is essential for long-term success?

One approach is to shift the conversation entirely. Metrics shouldn’t be about enforcing accountability; they should be tools for revealing where we’re getting in our own way. For example, tracking self-reported productivity alongside system metrics often uncovers mismatches between how teams feel and what the numbers say.
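One way to put this into practice is to standardize a survey-based self-rating and a system metric across teams, then flag the teams where the two diverge. The team names, the 1-5 survey scale, merged PRs per engineer per week as the system metric, and the 1.5-standard-deviation threshold are all illustrative assumptions, not a recommended scoring model.

```python
from statistics import mean, pstdev

# Hypothetical per-team data: average self-rated productivity on a 1-5 survey scale,
# and a system metric (merged PRs per engineer per week). Values are illustrative only.
teams = {
    "payments": {"self_rating": 4.0, "merged_prs": 4.0},
    "search":   {"self_rating": 2.0, "merged_prs": 4.0},
    "platform": {"self_rating": 3.0, "merged_prs": 2.0},
    "mobile":   {"self_rating": 3.0, "merged_prs": 2.0},
}


def z_scores(values: dict[str, float]) -> dict[str, float]:
    """Standardize values across teams so differently scaled signals can be compared.

    Assumes the values are not all identical (otherwise the standard deviation is zero).
    """
    mu, sigma = mean(values.values()), pstdev(values.values())
    return {team: (v - mu) / sigma for team, v in values.items()}


def mismatches(data: dict[str, dict[str, float]], threshold: float = 1.5) -> list[str]:
    """Teams whose self-rating and system metric diverge by more than `threshold` std devs."""
    z_self = z_scores({t: d["self_rating"] for t, d in data.items()})
    z_sys = z_scores({t: d["merged_prs"] for t, d in data.items()})
    return sorted(t for t in data if abs(z_self[t] - z_sys[t]) > threshold)


print(mismatches(teams))  # ['search']
```

Here `search` merges as many PRs as `payments` but rates its own productivity far lower. The flag doesn’t say which signal is right; it says where to start a conversation.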

So, How Can You Approach Engineering Metrics in 2025?

If there’s one thing I’ve learned and keep sharing within my team and ecosystem, it’s that metrics are just the beginning. They’re a tool for sparking conversations, not the be-all and end-all. The real work comes in interpreting what the numbers mean and figuring out how to act on them.

I’m still figuring out some of this myself, but these are the questions I keep coming back to:

- How do we highlight the value of invisible work?

- How do we align engineering metrics with business goals without stifling innovation?

- And most importantly, how do we create workplaces where developers can thrive while delivering meaningful results?

This journey has been as much about learning as it has been about doing. And helping teams answer these questions is what we are busy building at Hatica, for engineering teams across the globe.

I’d love to hear how others are rethinking productivity in their own teams: what’s working, what’s not, and what we can learn from each other. How are you approaching this in your organization in 2025 and beyond? You can always reach me here on LinkedIn; I’m happy to talk and to learn from your experience.